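The test environment is an Alibaba Cloud instance in its most basic configuration: a single core of an Intel(R) Xeon(R) CPU E5-2420 0 @ 1.90GHz, 512 MB of RAM, running 64-bit CentOS. The test data enumerates every possible combination of the Double Color Ball (双色球) lottery, 17,721,088 lines in total, stored in a file named di.txt that is 363,421 KB in size. leveldb is best run on an SSD, and the virtual disk used here is certainly slower than that, so these numbers are only meant for learning and for relative comparison.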
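The line count can be checked directly: the lottery draws 6 red balls out of 33 plus 1 blue ball out of 16, which gives C(33, 6) × 16 = 17,721,088 combinations. The sketch below shows one way such a file could be generated. It is written for Python 3, the function names are my own, and the line format (zero-padded numbers separated by spaces) is an assumption, chosen because it yields 21 bytes per line, which is consistent with the reported file size.

```python
import itertools
import math

RED_BALLS = range(1, 34)    # red balls are numbered 1..33, six are drawn
BLUE_BALLS = range(1, 17)   # blue balls are numbered 1..16, one is drawn


def total_combinations():
    """Number of distinct draws: C(33, 6) * 16."""
    return math.comb(33, 6) * 16


def iter_lines():
    """Yield one line per combination (assumed format: '01 02 03 04 05 06 01')."""
    for reds in itertools.combinations(RED_BALLS, 6):
        for blue in BLUE_BALLS:
            yield " ".join("%02d" % n for n in reds + (blue,))


def write_file(path):
    """Write every combination to path, one per line (a file of roughly 355 MB)."""
    with open(path, "w") as out:
        for line in iter_lines():
            out.write(line + "\n")


if __name__ == "__main__":
    print(total_combinations())
    print(next(iter_lines()))
```

Calling `write_file("di.txt")` would produce the full file; the main block only prints the count and the first line so that it finishes instantly.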

Official site

http://code.google.com/p/py-leveldb/

Installation commands

    svn checkout http://py-leveldb.googlecode.com/svn/trunk/ py-leveldb-read-only
    cd py-leveldb-read-only/
    # Note: the script below uses git to fetch leveldb, so make sure a git client is installed first.
    ./compile_leveldb.sh
    python setup.py build
    python setup.py install

Problems with the Python build

Error

    ../snappy-read-only/snappy.h:45:33: error: snappy-stubs-public.h

Fix:

    yum -y install autoconf automake libtool

The build still failed after this, so I installed the compression library (snappy) by hand.

URL: http://code.google.com/p/snappy/

Commands

    wget http://snappy.googlecode.com/files/snappy-1.1.1.tar.gz
    tar zxf snappy-1.1.1.tar.gz
    cd snappy-1.1.1
    ./configure --enable-shared=no --enable-static=yes
    make CXXFLAGS='-g -O2 -fPIC'
    make install

After that, py-leveldb compiled and installed successfully.

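Problems compiling the C code

Error

    /usr/bin/ld: cannot find -lleveld
    collect2: ld returned 1 exit status

Fix

Copy the .so and .a files into the system library directory. The test system is 64-bit, so they go straight into lib64.

    cp libleveldb.* /usr/lib64/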

Python tests

Sequential write code

    #!/bin/python
    #-*- coding:utf-8 -*-
    # Filename:
    # Revision:
    # Date: 2013-12-14
    # Author: simonzhang
    # web: www.simonzhang.net
    # Email: simon-zzm@163.com
    ### END INIT INFO
    import leveldb
    import time

    db = leveldb.LevelDB('./data')
    # multiple put/delete applied atomically, and committed to disk
    #batch = leveldb.WriteBatch()
    f = open('di.txt')
    num = 0
    start = time.time()
    for i in f:
        # strip the trailing newline, if any
        if i[-1] == '\n':
            data = i[:-1]
        else:
            data = i
        num += 1
        # the key is the 1-based line number, as a string
        db.Put("%s" % num, data)
    end = time.time()
    print "use sec %s" % (end-start)

Batch write code

    #!/bin/python
    #-*- coding:utf-8 -*-
    # Filename:
    # Revision:
    # Date: 2013-12-14
    # Author: simonzhang
    # web: www.simonzhang.net
    # Email: simon-zzm@163.com
    ### END INIT INFO
    import leveldb
    import time

    db = leveldb.LevelDB('./data')
    batch = leveldb.WriteBatch()
    f = open('di.txt')
    num = 0
    start = time.time()
    for i in f:
        # strip the trailing newline, if any
        if i[-1] == '\n':
            data = i[:-1]
        else:
            data = i
        num += 1
        batch.Put("%s" % num, data)
        # memory is tight, so flush to disk every 50,000 rows
        if (num % 50000) == 0:
            db.Write(batch, sync = True)
            batch = leveldb.WriteBatch()
    # flush whatever is left after the last full batch
    # (the total of 17721088 rows is not a multiple of 50000)
    db.Write(batch, sync = True)
    end = time.time()
    print "use sec %s" % (end-start)
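The batching pattern itself, flushing every N rows and then once more for the remainder, can be tried without leveldb installed. The following is only a sketch in Python 3: `FakeStore` is a hypothetical in-memory stand-in of my own, not part of py-leveldb, and `load_in_batches` is likewise an illustrative name.

```python
class FakeStore(object):
    """In-memory stand-in for a key-value store (hypothetical, not py-leveldb)."""

    def __init__(self):
        self.data = {}
        self.flushes = 0

    def write(self, batch):
        """Apply a list of (key, value) pairs in one step."""
        self.data.update(batch)
        self.flushes += 1


def load_in_batches(lines, store, batch_size=50000):
    """Store each line under its 1-based row number, flushing every batch_size rows."""
    batch = []
    num = 0
    for line in lines:
        num += 1
        batch.append((str(num), line.rstrip("\n")))
        if len(batch) == batch_size:
            store.write(batch)
            batch = []
    if batch:
        # flush the final, partially filled batch
        store.write(batch)
    return num


if __name__ == "__main__":
    store = FakeStore()
    rows = load_in_batches(["a\n", "b\n", "c\n", "d\n", "e"], store, batch_size=2)
    print(rows, store.flushes, sorted(store.data.items()))
```

Flushing the remainder after the loop avoids hard-coding the total row count into the flush condition, so the same loop works for an input of any length.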

Code for 10 million random reads:

    #!/bin/python
    #-*- coding:utf-8 -*-
    # Filename:
    # Revision:
    # Date: 2013-12-14
    # Author: simonzhang
    # web: www.simonzhang.net
    # Email: simon-zzm@163.com
    ### END INIT INFO
    import leveldb
    from random import randint
    import time

    db = leveldb.LevelDB('./data')
    start = time.time()
    for i in xrange(10000000):
        # pick a random key among the first 10 million rows
        num = randint(1, 10000000)
        try:
            v = db.Get("%s" % num)
            print v
        except KeyError:
            # the key does not exist; skip it
            pass
    end = time.time()
    print "use sec %s" % (end-start)

Test results

    # python write_seq.py
    use sec 329.217786074

53,827 writes per second

    # python write_batch.py
    use sec 173.626176119

102,064 writes per second

    # python read.py
    use sec 288.070755005

34,713 random reads per second
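The per-second figures are simply the number of operations divided by the elapsed time, truncated to a whole number. A small Python 3 sketch of that arithmetic follows; the constant and function names are my own.

```python
TOTAL_ROWS = 17721088      # lines in di.txt
RANDOM_READS = 10000000    # lookups performed by the read script


def per_second(operations, seconds):
    """Whole operations per second (fractional part dropped)."""
    return int(operations / seconds)


if __name__ == "__main__":
    print(per_second(TOTAL_ROWS, 329.217786074))    # sequential write
    print(per_second(TOTAL_ROWS, 173.626176119))    # batch write
    print(per_second(RANDOM_READS, 288.070755005))  # random read
```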

The C code. For convenience, I wrote it as two separate programs.

C: 10 million random reads

    // Filename:
    // Revision:
    // Date: 2013-12-14
    // Author: simonzhang
    // web: www.simonzhang.net
    // Email: simon-zzm@163.com
    // END INIT INFO
    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <time.h>
    #include "leveldb/c.h"

    // Write value as decimal text into string; size is the capacity of the buffer.
    char *itoa(int value, char *string, int size)
    {
        int rt = 0;
        if (string == NULL)
            return NULL;
        if (size <= 0 || size > 30)
            return NULL;
        rt = snprintf(string, size, "%d", value);
        if (rt < 0 || rt >= size)
            return NULL;
        return string;
    }

    int main()
    {
        leveldb_t *db;
        leveldb_options_t *options;
        leveldb_readoptions_t *roptions;
        char *err = NULL;
        char *read;
        size_t read_len;
        time_t start_time, end_time;
        // OPEN leveldb
        options = leveldb_options_create();
        // leveldb_options_set_error_if_exists(options, 1);
        // leveldb_options_set_cache(options, cache);
        // leveldb_options_set_env(options, env);
        // leveldb_options_set_info_log(options, NULL);
        // leveldb_options_set_write_buffer_size(options, 100000);
        // leveldb_options_set_paranoid_checks(options, 1);
        // leveldb_options_set_max_open_files(options, 4096);
        // leveldb_options_set_block_size(options, 1024);
        // leveldb_options_set_block_restart_interval(options, 8);
        // leveldb_options_set_compression(options, leveldb_no_compression);
        leveldb_options_set_compression(options, leveldb_snappy_compression);
        leveldb_options_set_create_if_missing(options, 1);
        db = leveldb_open(options, "data", &err);
        if (err != NULL) {
            fprintf(stderr, "Open fail.\n");
            return(1);
        }
        leveldb_free(err);
        err = NULL;
        roptions = leveldb_readoptions_create();
        // start read
        start_time = time(NULL);
        int X=99,Y=15000000; // X is the lower bound, Y the upper bound
        int _rand; // random number
        char s[16]; // room for 8 digits plus the terminating NUL
        srand( (unsigned)time( NULL ) );
        for (int i = 0; i<10000000; i++) {
            _rand = rand()%(Y-X+1)+X;
            itoa(_rand,s,sizeof(s));
            read = leveldb_get(db, roptions, s, strlen(s), &read_len, &err);
            //printf("%s\n", read);
            if (err != NULL) {
                fprintf(stderr, "Read fail.\n");
                return(1);
            }
            leveldb_free(err);
            free(read);
        }
        // CLOSE
        leveldb_close(db);
        end_time = time(NULL);
        printf("%ld\n", (long)(end_time-start_time));
        return 0;
    }

C: sequential write

    // Filename:
    // Revision:
    // Date: 2013-12-14
    // Author: simonzhang
    // web: www.simonzhang.net
    // Email: simon-zzm@163.com
    // END INIT INFO
    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <time.h>
    #include "leveldb/c.h"

    // Write value as decimal text into string; size is the capacity of the buffer.
    char *itoa(int value, char *string, int size)
    {
        int rt = 0;
        if (string == NULL)
            return NULL;
        if (size <= 0 || size > 30)
            return NULL;
        rt = snprintf(string, size, "%d", value);
        if (rt < 0 || rt >= size)
            return NULL;
        return string;
    }

    int main()
    {
        leveldb_t *db;
        leveldb_options_t *options;
        leveldb_writeoptions_t *woptions;
        char *err = NULL;
        time_t start_time, end_time;
        // input file
        FILE *di;
        if((di=fopen("di.txt","r"))==NULL) {
            printf("can't open!\n");
            return -1;
        }
        // OPEN leveldb
        options = leveldb_options_create();
        // leveldb_options_set_error_if_exists(options, 1);
        // leveldb_options_set_cache(options, cache);
        // leveldb_options_set_env(options, env);
        // leveldb_options_set_info_log(options, NULL);
        // leveldb_options_set_write_buffer_size(options, 100000);
        // leveldb_options_set_paranoid_checks(options, 1);
        // leveldb_options_set_max_open_files(options, 4096);
        // leveldb_options_set_block_size(options, 1024);
        // leveldb_options_set_block_restart_interval(options, 8);
        // leveldb_options_set_compression(options, leveldb_no_compression);
        leveldb_options_set_compression(options, leveldb_snappy_compression);
        leveldb_options_set_create_if_missing(options, 1);
        db = leveldb_open(options, "data", &err);
        if (err != NULL) {
            fprintf(stderr, "Open fail.\n");
            return(1);
        }
        leveldb_free(err);
        err = NULL;
        woptions = leveldb_writeoptions_create();
        // start write
        start_time = time(NULL);
        int ch;        // int rather than char, so EOF can be told apart from data
        char str[30];  // the current line
        int num=0, c=0;
        ch = fgetc(di);
        while(ch!=EOF) {
            if (ch == '\n') {
                char s[16];
                itoa(num,s,sizeof(s));
                // c is the length of the current line
                leveldb_put(db, woptions, s, strlen(s), str, c, &err);
                if (err != NULL) {
                    fprintf(stderr, "Write fail.\n");
                    return(1);
                }
                c = 0;
                num += 1;
            } else if (c < (int)sizeof(str)) {
                str[c] = ch;
                c += 1;
            }
            ch = fgetc(di);
        }
        fclose(di);
        // CLOSE
        leveldb_close(db);
        end_time = time(NULL);
        printf("%ld\n", (long)(end_time-start_time));
        return 0;
    }

Tests

C sequential write

Compile

    gcc -Wall -std=c99 write-leveldb.c -lleveldb -O3 -o write-leveldb

Result

    # ./write-leveldb
    225

78,760 writes per second

C: 10 million random reads

Compile

    gcc -Wall -std=c99 read-leveldb.c -lleveldb -O3 -o read-leveldb

Result

    # ./read-leveldb
    143

69,930 reads per second
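To put the C and Python runs side by side, the elapsed times can be turned into a rough speedup factor. This is a Python 3 sketch with names of my own choosing. Keep in mind that the C programs time themselves with `time(NULL)`, which only has one-second resolution, so the ratios are approximate.

```python
# Elapsed seconds reported by each run.
PYTHON_SECONDS = {"sequential write": 329.217786074, "random read": 288.070755005}
C_SECONDS = {"sequential write": 225, "random read": 143}


def speedup(python_seconds, c_seconds):
    """How many times faster the C run finished, rounded to two decimals."""
    return round(python_seconds / c_seconds, 2)


def compare():
    """Return {test name: speedup of C over Python}."""
    return {name: speedup(PYTHON_SECONDS[name], C_SECONDS[name])
            for name in PYTHON_SECONDS}


if __name__ == "__main__":
    for name, factor in sorted(compare().items()):
        print("%s: C is about %.2fx faster" % (name, factor))
```

On these numbers the C program is roughly 1.5 times faster for sequential writes and roughly 2 times faster for random reads.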

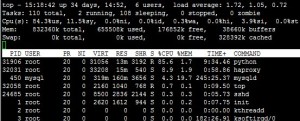

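The CPU was fully saturated for the whole write. Because snappy compression is enabled, the data directory came to 175 MB, roughly half the size of the input file.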

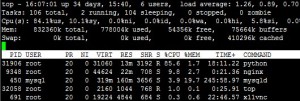

Random reads also saturated the CPU. The Python process used 23% of memory, while the C program's memory use eventually climbed to 39%.

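I then ran one more test. The machine has only 512 MB of physical memory, and I inserted data until 826 MB was stored on disk. Running the Python code for another 10 million random reads took 347.94 seconds, or 28,740 random reads per second. So when the data outgrows physical memory nothing breaks; reads just get slower.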

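One question I did not test: leveldb's data files default to 2 MB each, so 64 GB of data would mean about 32,768 data files (64 GB / 2 MB), which could run into the system's limit on open files. I don't know whether that would cause problems. Having that many files in a single directory also puts some pressure on the system, and I don't know whether it would have a noticeable effect. The recommended approach is to increase the size of each file, but I have not tested how efficient that is either.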
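The file-count estimate can be reproduced with a few lines of Python 3. This is only back-of-the-envelope arithmetic: it assumes every data file is exactly 2 MB, which is a simplification, and the function name is my own. On Unix, the main block also prints the per-process open-file limit for comparison.

```python
MB = 1024 * 1024
GB = 1024 * MB


def data_file_count(total_bytes, file_bytes=2 * MB):
    """Files needed to hold total_bytes, rounding up to a whole file."""
    return (total_bytes + file_bytes - 1) // file_bytes


if __name__ == "__main__":
    print(data_file_count(64 * GB))     # files for 64 GB of data
    print(data_file_count(128 * GB))    # files for 128 GB of data
    try:
        import resource                 # Unix only
        soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
        print("open-file limit (soft, hard):", soft, hard)
    except ImportError:
        pass
```

With 2 MB files, 64 GB works out to 32,768 files, and a count of 65,536 files corresponds to 128 GB.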

One more note: I also ran the tests with pypy, and it performed worse than python. I did not look into the reason.